We’ve reached an interesting inflection point in the history of Artificial Intelligence, one worth marking. For the first time, the general public knows enough about AI to have formed strong opinions and preferences about how it should be used, while remaining completely divorced from any real understanding of how the technology itself works.

This isn’t new or unique to AI (go look at your microwave and tell me you don’t have strong opinions about how it should work despite having zero understanding of how it actually works), but it speaks to how rapidly AI has been adopted. Once a technology is packaged into a product that the world accepts, understanding is no longer required. Welcome to the Eternal September of Artificial Intelligence.

People are running very hot or cold on AI right now, and when knowledgeable people whom I deeply respect are upset, it’s always prudent to listen. In this series, we’ll endeavor to cut through the hype, the marketing, and the sheer frustration of this moment and unpack it together.

So how did we get to this point?

The short answer is: ChatGPT. Its release in 2022 marked the beginning of cutting-edge AI technology being condensed into a form that people actually wanted to use. While an overly chummy chatbot is a considerably less dramatic introduction of Artificial Intelligence to human history than some predicted, it’s what reality yielded.

Three years on from ChatGPT’s launch, the technology has rapidly evolved beyond chat to incorporate speech, vision, hearing, reasoning, image / audio / video generation, and more. New capabilities, competitors, and offshoots are arriving at such a dizzying speed that it is impossible to list them comprehensively here. But are consumers actually using these features? Do these features actually provide the utility that they promise? Or are we wasting an inordinate amount of energy and GPU cycles without a justifiable reason?

It’s tough to separate hype from capability, especially when you’re examining it from within the moment of adoption itself. Instead, let’s compare three years of AI productization against another technology: the smartphone.

As technical products go, the smartphone represents the gold standard for both mass adoption and impact on the world. There was virtually no consumer market for smartphones in 2006 (though enterprise was having a moment with RIM’s BlackBerry and Palm OS devices), and today they are near ubiquitous, with 98% of the US population owning and using a smartphone daily.

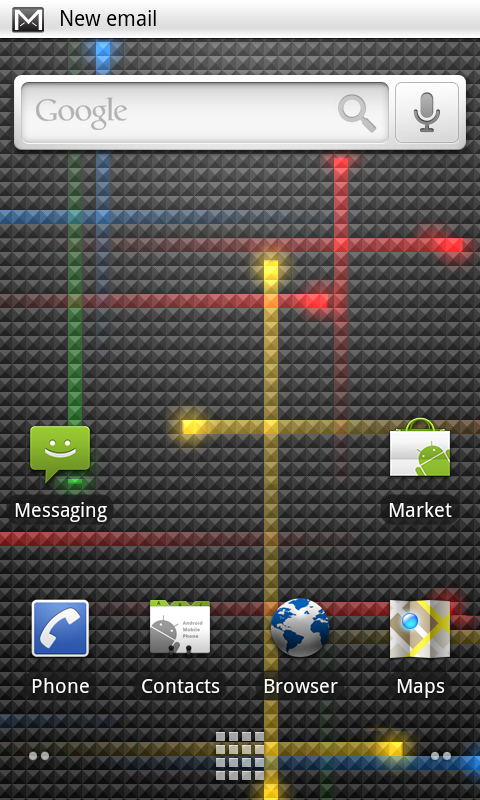

With the first iPhone arriving in 2007 and the first Android phone launching a year later, mapping ChatGPT’s launch onto the iPhone’s and adding three years lands our comparison in 2010: the “iPhone 4 versus Nexus One” era.

For those unfamiliar with this era, it looked like this:

We had not yet figured out all of the killer features that smartphones had to offer. There were bugs. There were caveats. There was launch drama.

Smartphones were not perfect in 2010, but enough pieces of the journey were there to entice both users and developers. Uber was rounding out its first year of business, but DoorDash, Instacart, and other app behemoths were still years away from existing. Angry Birds topped the app charts on iOS, while Android’s app ecosystem was still developing and trying to break into a market largely dominated by Apple.

Only one in four US adults owned a smartphone in 2010, but the trends clearly pointed toward mass adoption. Over the last decade and a half, that number has risen steadily as smartphones have improved across hardware, software, and overall experience.

So how does Artificial Intelligence stack up in our smartphone comparison? Better than I would have initially thought. Compared to the 25% smartphone adoption rate among US adults in 2010, 34% of US adults had used ChatGPT as of 2025 (peaking at 58% among 18-29-year-olds). Many AI use cases also have a lower barrier to entry than smartphones did in 2010 ($500 or more for the device itself), so it has been comparatively easier for users to gain access to and experience with AI.

And as in 2010, the market appears to be moving from one arguably defined by a single company (Apple then, OpenAI now) to one being shaken up by the entry of a viable competitor (Google then and now). For users, this is a wonderful time to sit back and watch these companies battle furiously for dominance of an emerging market. For developers, it is also a great moment to explore the flurry of activity in the ecosystem and marvel at the sheer volume of capabilities becoming available daily (for burgeoning AI devs, the Qwen and Unsloth repositories on Hugging Face are both well worth checking out).

Just as with smartphones, today’s small demos and incremental features may grow into tomorrow’s behemoths. Whether you like AI or not, its impact is here to stay.

But adoption is not the same as utility. People are using AI systems and are willing to incorporate them into their lives in at least some way, but we are far from understanding the long-term trade-offs of doing so. We will be writing the legislative and policy rulebooks for many GenAI systems at a pace that naturally lags behind both their capabilities and the longitudinal studies needed to understand them.

We’re stuck with it. So what do we do about it?

Many of us at Pelidum are veterans of the browser wars and the early days of online safety, and this feels like a familiar dynamic: a new technology, itself agnostic in purpose, finds mass adoption in the best and worst ways simultaneously. In the past, the tech industry has solved this by pairing data, policy, and process in equal measure until the problem felt at least manageable.

The nature and pace of Generative AI adoption have pushed this core approach to its limit, and we’d like to start sharing some of the hard lessons we’ve learned over the last year in case they’re helpful to others.

Over the next few weeks, we’ll be sharing deep dives on these topics:

- The Basics: Terminology, technology, and common ground. How do we demystify what “AI” is and build shared understanding across tech teams, safety teams, and product / marketing? Where can you run AI systems? How can you prove that they work for your actual use cases?

- Moving beyond “chat”: If you’ve only used AI as a chatbot, you might be thinking: “So what?” How do you actually use AI to get things done? What is it good and bad at? We’ll also run through some practical demos and free AI tooling you can run at home.

- AI at home: If you’re concerned about privacy or want to quantify / offset your own environmental impact, what does it take to run AI models yourself? Is the quality good enough to even justify the time investment?

- Lessons from a year of AI safety evals: At Pelidum, we have been testing how effective AI actually is at safety classification, comparing local and remote deployments across a number of scenarios, harm verticals, and modalities. We’ll dive into some of the trends and results we’ve seen over time and where these systems have succeeded and failed.

If you or your team are working along similar lines, we would love to hear your thoughts or collaborate. Please feel free to reach out to Peter, Liam, or me directly on LinkedIn or shoot us an e-mail at info@pelidum.com.

Mike Castner

CTO, Pelidum
